The quality of an AI Agent’s output in Shibumi is directly shaped by the quality of its prompt. A well-crafted prompt provides clear context, leverages relevant data through injected expressions, and defines a structured, purposeful output. These elements help the AI understand what it’s analyzing, why it matters, and how to respond effectively.

In contrast, a vague or incomplete prompt leaves the AI without sufficient direction. Missing context, undefined structure, or a lack of meaningful attribute references can result in generic or inconsistent responses that fail to add real value.

Good Prompt vs. Bad Prompt: Understanding the Impact of Clarity

This section compares a weak prompt and a strong one side by side, demonstrating how thoughtful prompting – built on the principles of context, data grounding, and clarity – transforms the AI’s performance. You’ll see how a few deliberate adjustments can elevate results from simple summaries to insightful, decision-ready outputs.

Bad Prompt Example

Prompt:

Summarize the current status.

Why it performs poorly:

- No context – The AI doesn’t know what level of work item it’s summarizing (Initiative, Workstream, or Program).

- No injected data – Without expressions referencing live attribute data (e.g., {Status__c}, {Stage__c}), the AI has nothing concrete to work from.

- Undefined output structure – The AI isn’t told what to include, how long to make it, or who the audience is.

- Lacks purpose – The AI can’t infer whether this summary is for an executive update, operational review, or simple recordkeeping.

The resulting output is vague, inconsistent, and often irrelevant:

[ The status is progressing. More work is needed. ]

Good Prompt Example

Prompt:

Summarize the current {Initiative__t.name} status based on {Status__c}, {Due_Date__c}, and {Progress__c}.

Review descendant milestone status and due dates. Include 1-2 key accomplishments, any current risks or blockers, and the next steps to be taken.

Keep the summary under 100 words and suitable for an executive audience.

Why it performs well:

- Clear context – The AI knows which level of the hierarchy it’s summarizing and why.

- Grounded in data – Injected expressions give the AI real values to interpret.

- Structured output – Defined sections (accomplishments, risks, next steps) guide the narrative.

- Concise and audience-aware – The tone and length are optimized for executive reporting.

- Reusable – This prompt can run consistently across similar work items, ensuring reliable summaries over time.

The resulting output is concise, relevant, and action-oriented:

[ This Initiative is progressing as planned with key milestones completed ahead of schedule. A potential delay in vendor approval poses a moderate risk. Next step: finalize scope sign-off by next week to stay on track for delivery. ]
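To make the idea of injected expressions concrete, here is a minimal Python sketch of what the substitution step amounts to. It is not Shibumi's expression engine: the `resolve_expressions` helper and the sample values are hypothetical, and in Shibumi the values would come from the work item's live attributes rather than a hand-written dictionary.

```python
import re

# Hypothetical attribute values for one Initiative (illustration only).
SAMPLE_VALUES = {
    "Initiative__t.name": "Vendor Consolidation",
    "Status__c": "Green",
    "Due_Date__c": "2025-06-30",
    "Progress__c": "65%",
}

PROMPT_TEMPLATE = (
    "Summarize the current {Initiative__t.name} status based on "
    "{Status__c}, {Due_Date__c}, and {Progress__c}."
)


def resolve_expressions(template: str, values: dict[str, str]) -> str:
    """Replace each {expression} with its value; leave unknown ones untouched."""

    def substitute(match: re.Match) -> str:
        key = match.group(1)
        return values.get(key, match.group(0))

    return re.sub(r"\{([^{}]+)\}", substitute, template)


resolved = resolve_expressions(PROMPT_TEMPLATE, SAMPLE_VALUES)
print(resolved)
# Summarize the current Vendor Consolidation status based on Green, 2025-06-30, and 65%.
```

The resolved text is what the AI actually reads, which is why a prompt without expressions gives it nothing specific to interpret.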

Key Takeaway

The difference between these two prompts highlights the importance of:

- Providing context to define what the AI is doing

- Using injected expressions and descendant detail to ground the response in real-time data

- Establishing structure to ensure consistent, actionable outputs

- Framing the audience and intent so the tone and focus align with business needs

By following these principles, AI Agents configured in Shibumi will deliver outputs that are not only more accurate but also directly valuable to program leaders and stakeholders.
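As a rough illustration, several of these principles can be checked mechanically before a prompt is saved. The sketch below is a hypothetical helper, not a Shibumi feature: the `lint_prompt` name and its keyword lists are assumptions chosen for this example, and the checks are simple heuristics. Context is harder to detect automatically, so it is left out.

```python
import re

# Hypothetical keyword lists; adjust them to your own reporting vocabulary.
AUDIENCE_WORDS = ("audience", "executive", "stakeholder", "leadership")
STRUCTURE_WORDS = ("accomplishment", "risk", "blocker", "next step")


def lint_prompt(prompt: str) -> list[str]:
    """Return a list of warnings; an empty list means no obvious gaps."""
    text = prompt.lower()
    warnings = []
    # Any {…} placeholder counts as an injected expression.
    if not re.search(r"\{[^{}]+\}", prompt):
        warnings.append("no injected expressions")
    if not any(word in text for word in STRUCTURE_WORDS):
        warnings.append("no output structure")
    if not any(word in text for word in AUDIENCE_WORDS):
        warnings.append("no audience or intent")
    # Looks for a phrase such as "100 words".
    if not re.search(r"\b\d+\s+words\b", text):
        warnings.append("no length limit")
    return warnings


print(lint_prompt("Summarize the current status."))
```

Run against the bad prompt above, the helper reports all four gaps. Run against the good prompt, it reports none.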

The Ideal Prompt: A Model of Structured Clarity

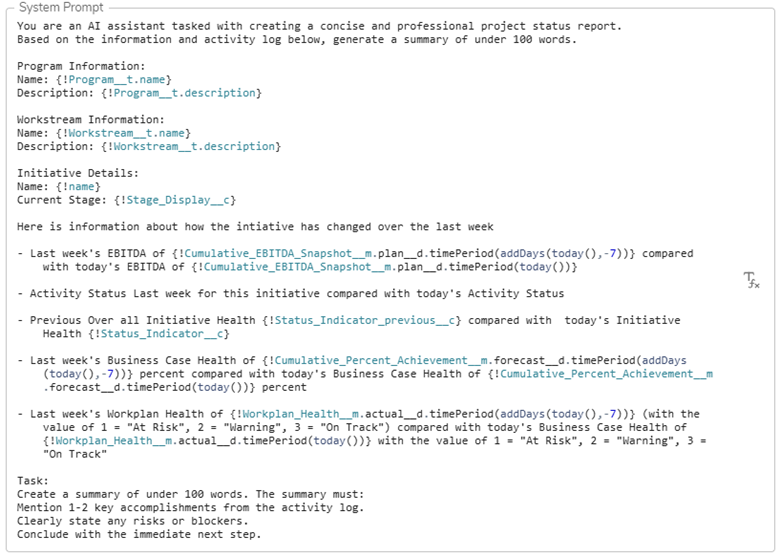

This example demonstrates a fully optimized prompt designed for the Summarization Agent in Shibumi. It blends data-driven context, structured instruction, and clear output expectations – setting the standard for reliable, high-quality AI results.

Why This Prompt Excels

Clear Role Definition

The opening line – “You are an AI Assistant tasked with creating a concise and professional project status report” – establishes purpose and tone. This ensures the AI understands it is producing a formal, business-oriented summary rather than a casual or analytical response.

Hierarchical Context

By including Program, Workstream, and Initiative information, the prompt situates the AI within Shibumi’s hierarchy. This prevents ambiguity about what level of the solution the Agent is summarizing and allows the AI to reference the appropriate scope in its wording (e.g., “Within the Operations Workstream…”).

Intelligent Use of Injected Expressions

Expressions such as {!Status_Indicator__c}, {!Cumulative_EBITDA_Snapshot__m.plan__d.timePeriod(today())}, and {!Workplan_Health__m.actual__d.timePeriod(today())} dynamically feed real, contextual data into the prompt. This makes the summary data-driven and ensures that each execution reflects current metrics rather than static text.

Temporal Comparison for Insight

By providing last week’s and today’s values, the prompt enables the AI to describe change over time – a key differentiator from basic summarization. This structure encourages narrative continuity and makes updates more meaningful to leadership audiences.
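A small sketch shows why a pair of values carries more information than a single snapshot. The `format_metric_change` helper and the numbers are hypothetical. In Shibumi, both values would be supplied by injected expressions, and the AI would describe the change itself.

```python
def format_metric_change(name: str, last_week: float, today: float) -> str:
    """Render a last-week/today pair as one line, including the direction of change."""
    delta = today - last_week
    line = f"{name}: last week {last_week:g}, today {today:g}"
    if delta == 0:
        return f"{line} (unchanged)"
    direction = "up" if delta > 0 else "down"
    return f"{line} ({direction} {abs(delta):g})"


# Hypothetical values for illustration only.
print(format_metric_change("Workplan Health", 72, 80))
# Workplan Health: last week 72, today 80 (up 8)
```

With only today's value, the AI could report the metric but not whether it is improving or slipping.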

Explicit Output Structure

The “Task” section defines exactly what the summary must include:

- Key accomplishments

- Risks or blockers

- Immediate next steps

This gives the AI a checklist to follow, resulting in predictable, well-organized responses across Initiatives and time periods.

Word Limit for Consistency

Setting a strict length constraint (“under 100 words”) ensures uniform summaries that fit within dashboards, emails, or executive reports – avoiding the drift toward overly long or inconsistent narratives.
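If summaries are consumed downstream (for example, pasted into a dashboard tile), the length constraint can also be verified after the fact. The following is a minimal sketch with hypothetical helper names. It counts whitespace-separated words, which may differ slightly from how a given tool counts them.

```python
def word_count(summary: str) -> int:
    """Count whitespace-separated words in a summary."""
    return len(summary.split())


def within_word_limit(summary: str, limit: int = 100) -> bool:
    """True when the summary has fewer than `limit` words ("under 100 words")."""
    return word_count(summary) < limit


sample = (
    "This Initiative is progressing as planned with key milestones completed "
    "ahead of schedule. A potential delay in vendor approval poses a moderate "
    "risk. Next step: finalize scope sign-off by next week to stay on track "
    "for delivery."
)
print(within_word_limit(sample))
# True
```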

Multi-Level Awareness Without Overload

The prompt provides context at multiple levels (Program -> Workstream -> Initiative) but instructs the AI to focus output at the Initiative level. This balance of context and focus allows the AI to incorporate higher-level framing without diluting the Initiative-specific insight.

Summary

This prompt represents best practice for prompting within Shibumi – clear, contextual, and intentional. It gives the AI everything it needs to generate outputs that are insightful, consistent, and directly aligned with transformation reporting needs.

By combining role framing, injected expressions, temporal comparisons, and structured instructions, this example sets the standard for all future AI Agent configurations.